I had a chance to talk to PBS NewsHour last week about RFK Jr and the MAHA movement. Check it out!

The harmful effects of AI are already being felt, and mainstream companies are still in the early stages of adopting these technologies. Just this past week, I've read about:

YouTube’s new “editable AI-enhanced reply suggestions” creating a sea of AI slop

A century-and-a-half-old local newspaper in Oregon that closed, only for its site to fill up with AI slop, with many readers never realizing what happened

An illegal searchable database of 140,000 movie and TV scripts being used to train AI models

As with all technologies, it's not all bad. But it's definitely not all good either, as health officials and media figures in Australia are learning firsthand.

Deepfake down under

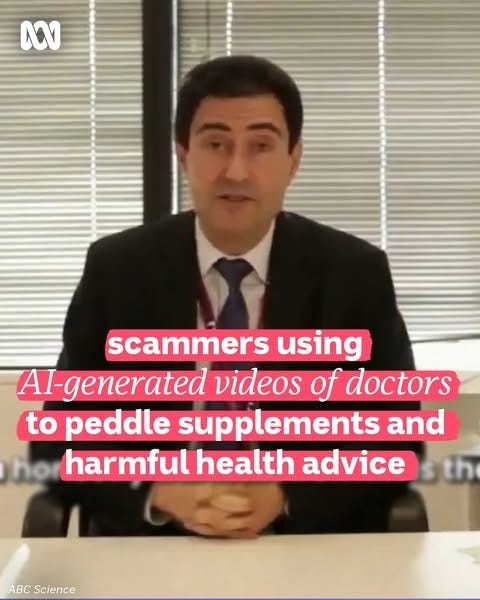

Deepfake AI videos are circulating as ads disguised as news segments, in which broadcasters interview doctors who slam prescription medications in order to push supplements.

Jonathan Shaw is a real doctor: he's the deputy director of the Baker Heart and Diabetes Institute in Melbourne. The broadcaster, Karl Stefanovic, is also real: he's a co-host of the Today show in Australia and a presenter on 60 Minutes.

I'm certainly not Australian, but from afar their voices and faces seem convincing.

The red flags:

When you look closely, Shaw's lip movements don't quite sync with the audio

I'm not sure how doctors talk on national television in Australia, but I doubt many would call people "idiots" for taking a common prescription drug

The caveats:

Most people don’t watch social media clips closely

I've talked to a number of Australians on Conspirituality, and they assure me America greatly influences their culture, so calling people "idiots" on air might not be that unusual

Despite these caveats, the AI video is very good, and the tech keeps getting better.

Like a lot of people, I consume media through online clips. I'm guessing more people see clips than watch entire shows. When clips appear in your feed, you're probably not paying attention to the source.

It's unclear whether the supplement manufacturer is behind this video. But the product in question contains berberine, a popular supplement ingredient that can cause nausea, constipation, diarrhea, and vomiting. Berberine can also interact with numerous medications, including blood thinners, immunosuppressants, and diabetes medications. Finally, berberine is considered unsafe during pregnancy, as it can cause a miscarriage or lead to brain damage in newborns.

What's more, the deepfake ad included text claiming the product was approved by the Therapeutic Goods Administration (TGA), Australia's equivalent of the FDA. This is false. The product has never been tested.

In Australia, it's illegal for medical professionals to endorse therapeutic goods, though not everyone knows that. According to researchers, the problem is recurring and getting worse: the TGA had to remove 4,800 unlawful ads in 2023-24.

It would be great if platforms vetted ads, but that's not going to happen. Once again, it falls to us to assess information.

A (possible) step forward: I know mass fact-checking on social media is unlikely. That said, here's my process before posting anything online:

Before posting, I either find another source or go to the publication’s site to confirm what I’m sharing

If there are caveats, I spell them out in the post's description

If I can’t confirm the source or verify via another outlet, I refrain from posting

Given my role combating misinformation on Conspirituality, I apply these practices to my personal feeds too. If you care about this topic, taking that extra step is essential.

This played out for me last week when I almost posted a fake manifesto attributed to Luigi Mangione. The text certainly struck an emotional chord. When I tried to verify it, however, nothing came up. The next day it was flagged as fake. I'm glad I didn't let my impulse win out.

Algorithms reward impatience. Don't let a small, momentary reward turn into a long-term regret.